Pi.ai just got eaten by Microsoft

Millions of people shared their intimate thoughts with Inflection.ai and Pi.ai, only to find out that Microsoft now owns all of it.

Yesterday, Microsoft made headlines by essentially absorbing Inflection.ai and its personal AI product, Pi, along with its founders and a significant part of its team. The move comes a year after Inflection raised $1.3 billion in a funding round that included Microsoft. It has sparked concerns over privacy and data ownership among users who trusted Pi with their personal thoughts.

I’m Yngvi Karlson, Co-Founder of Kin. Born in the Faroe Islands, I’ve spent my career building startups, with two exits along the way, and I spent five years as an active venture capitalist. Now, I’m dedicated to creating Kin, a personal AI people can truly trust.


What Happened

Inflection AI, once celebrated for its chatbot Pi.ai, set out to change how people converse with artificial intelligence and to offer a more empathic experience. The startup, led by co-founder Mustafa Suleyman, initially focused on building a distinctive personal AI assistant. However, instead of concentrating on user experience and product development, Inflection poured its energy into building its own large language models (LLMs). That choice likely drove substantial funding requirements, and Pi struggled to keep pace with the rapid advances of larger players in generative AI.

Microsoft's acquisition of Inflection and its intellectual property marks a significant shift in the AI market. The tech giant's move raises questions about the future of user data and the fate of innovation in the sector, especially as regulators scrutinize such consolidations. It also comes amid rapid progress from companies like OpenAI, Anthropic, and DeepMind.

The takeover of Pi's chatbot technology underscores a growing trend: large corporations tightening their grip on the AI market through strategic acquisitions. For users who value privacy and control over their data, this should be alarming, particularly as Microsoft keeps expanding its portfolio of AI products and services, including Copilot and Azure-based solutions. It highlights the importance of supporting AI technologies that put users' privacy and control first.

Hopefully Pi will let its users download a copy of their data and have it deleted from its (and Microsoft's) servers, because, as its privacy policy states:

“We may use personal information to….. respond to subpoenas or requests from government authorities.” 👀

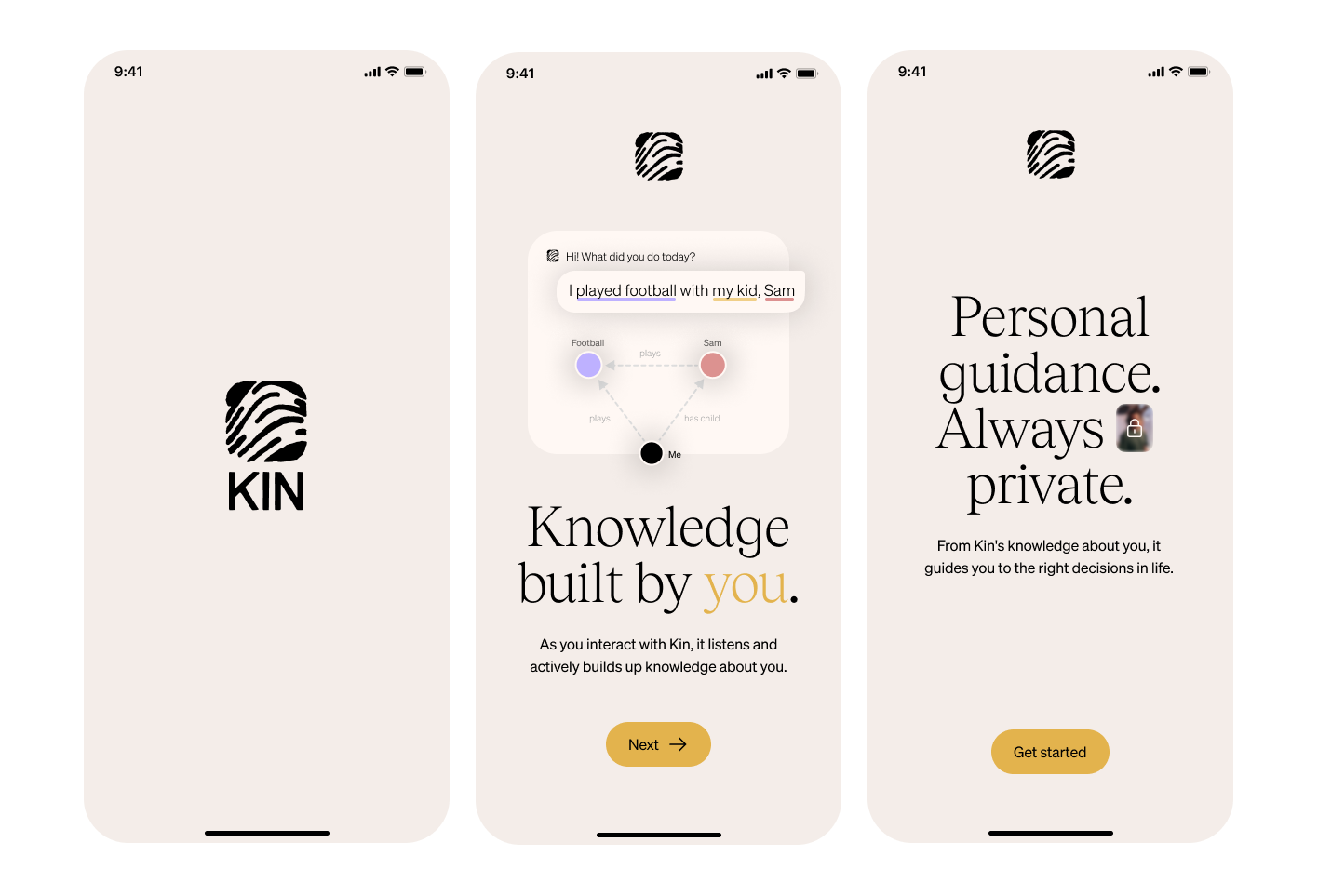

This is one of the main reasons Kin is built the way it is: it gives individuals control over their data and encrypts it on their device, so we as a company neither store it nor have access to it. As AI assistants continue to evolve, preserving privacy and data sovereignty becomes increasingly crucial to maintaining user trust.

I missed this the first time, but I believe that subhead should read "intimate", not "intimidate" ;)

I'm literally only 15 minutes or so into discovering Kin, and so many boxes are ticked already.

This is the first AI product I've seen that leaps into the ethical side of AI, primarily the safety of user data. I haven't done any deep dives yet, but it looks comprehensive so far.

My only questions might be around the legal structure and what happens in the event of debt failure, etc., but all in good time. I look forward to learning more about the app.